Cris

MG3K Forum Member

Editor & Writer | Official Word Count: 4887 | Fan Fiction: 0 | Awards: 8

Homidin: teh sc?o, teh torox, teh antha.

Posts: 506


Post by Cris on Apr 11, 2010 20:26:13 GMT -8

This thread is for discussing the following:

1. Robots

2. Robotics - the study and development of robots

3. Artificial limbs


Post by Cris on Apr 11, 2010 20:30:24 GMT -8

Cris wrote:

Eric,

We haven't talked about this.

When it comes to robots and integrating them, I would caution against focusing on robots and robotics (e.g., Battlestar Galactica or Caprica), because I worry that it will become more about the sci-fi than about the core of MG3K.

That said, our world is becoming more and more technologically advanced. Good writing integrates all of the background tech, culture and more into a cohesive story. As long as robots don't become the focus of the story, there is no reason the tech can't advance as much or as little as we want it to.

Cris


Post by Cris on Apr 11, 2010 20:31:55 GMT -8

Launa wrote: I saw a special recently about robots and how they're being used to help children with autism learn social cues. Instead of, or partnered with, human psychologists, the robot plays games with the child and talks with him/her. Because the robot is nonhuman and predictable, children with autism in particular tend to find it fascinating and nonthreatening. It makes logical sense. But as they play, the robot is programmed to read facial expressions, to get up and down like a human child would (for example, if knocked down, the robot pushes itself back up in the same way a human would) and so on. I guess they're having really amazing results in helping autistic children build social skills naturally. I thought it was a really interesting use for more complex AI.

Maria responded: I am interested in this American television show that suggests we teach our very human autistic children to interact in a human manner by using inhuman robots. This seems a truly misguided cause and, as a mother of an autistic child, I would never allow social cues and conditioning to be imparted to my progeny by artificial intelligence. To that end, I do not think I would be comfortable with a robotic au pair or primary school instructor, either.

This idea of how extensively AI is used in the Mardi Gras 3000 universe is intriguing because it raises the question: What is the natural progression from where we are now? What are the tolerances, and who among us will stand against this rise in robotic culture? I think where opposition prickles, and from whom, will surprise us.

It seems that the future can be charted fairly mathematically if we can chart a ten- or hundred-year growth pattern for the technologies or practices at the core of the arena we are charting, and then factor in an expected ratio of leaps forward or backward based on human revelation or human error.
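Maria's two-step idea (measure a historical growth rate, extrapolate it, then scale for leaps forward or backward) can be sketched as a small calculation. This is a hypothetical illustration only: the function names `annual_growth_rate` and `project`, the "capability index" and every number below are invented for the example, and treating a leap as a single multiplier is just one simple way to model a breakthrough or setback.

```python
def annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a historical start and end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(current: float, rate: float, years: int, leap_factor: float = 1.0) -> float:
    """Extrapolate `current` forward at a constant compound rate.

    leap_factor is a hypothetical multiplier: > 1.0 models a leap forward
    ("human revelation"), < 1.0 models a leap backward ("human error").
    """
    return current * (1.0 + rate) ** years * leap_factor


if __name__ == "__main__":
    # Hypothetical capability index: 10 twenty years ago, 40 today.
    rate = annual_growth_rate(10.0, 40.0, 20)
    print(f"implied growth: {rate:.2%} per year")
    print(f"30 years out: {project(40.0, rate, 30):.0f}")
    print(f"with a breakthrough: {project(40.0, rate, 30, 1.5):.0f}")
    print(f"with a setback: {project(40.0, rate, 30, 0.5):.0f}")
```

With these made-up inputs the implied rate is about 7.2% a year, and the 30-year baseline is 320 (480 with the breakthrough factor, 160 with the setback). The point is only that the leap factor sits on top of the trend line rather than replacing it.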

The first step for charting a realistic, or at least interesting and engaging, MG3K future culture would be to pinpoint the major arenas that make up a culture.

Machines and how human beings (and Humans) interact with them is certainly one arena.

Estella responded: Are we speaking of true robotics, cybernetics, or bionetics? The physically embodied programs that Launa is speaking of and Teresa posted are cybernetics. They are adaptive and driven by metamorphic code. Robotic technology, on the other hand, is what built your Hummer. Then you have bionetics, which would be everything from a pacemaker to the "lace" once discussed here on the forum.

Would all of these be subcategories within the overall category or arena entitled Machines? Or would they be medicine? Or technology as a whole?

Perhaps give me an example of what you see as these fundamental building blocks.

Cris responded: I agree it's important to distinguish the kind of AI we're talking about here. The bionetics that make an artificial limb possible, along with "lace" and other medical technologies, are a different matter. Those things are well on their way, but only for those who can afford them. Certainly that technology isn't available to the mainstream individual with basic health coverage.

Cybernetic technology is more of a hot-button issue, and it is where Leigh's post recalling Aristotle (see Prime Time: Cornerstone: Growth) comes into play: passion, desire, habit, reason, nature, compulsion and chance. All of these will inform how the more controversial technologies are integrated into MG3K.

Thank you for clarifying this, Estella.

Cris continued: I have to agree with Maria here. There is no way I would let a robot "teach" my child what kind of responses are socially acceptable. I find the idea incredibly stupid. My autistic son doesn't lie, cheat, obfuscate, withhold affection, manipulate others... so many "wonderful" qualities that our children learn every day in our mainstream public school culture.

The past three months of tutoring students in the public elementary schools around here have only reinforced my belief that, if anything, we need more teachers, tutors and other individuals who can step in to put a band-aid on this system until it's overhauled.

The last thing we need is robots teaching our children how to behave. Who decides that, anyway?

Launa responded: I don't think I was very clear in my original explanation. I personally agree that I wouldn't want a robot instructing my child about how to behave, and I also think this little robot is being spun as higher technology than it is; I just found the story interesting. For better or worse, if this kind of technology is current enough, and deemed accessible enough to the public, to be featured on a medical talk show, I think the ideas it presents might be something we'll have to incorporate into humanity's future.

These robots are not teaching the children in the sense of a teacher to a student. They look like toys. They play games. They are adaptive and are able to read facial expressions, access the internet and read emotions. They even dance.


Post by Cris on Apr 11, 2010 20:42:28 GMT -8

Launa wrote: "Meet Nao, a robot providing a revolutionary treatment for autistic children. Manufactured by Aldebaran Robotics in France, the small robot is capable of autonomous movement and face and voice recognition, and is equipped with extensive programming to support and facilitate social interaction. A pervasive developmental disorder, autism refers to a group of ailments that cause delays in the development of basic skills. Most children afflicted with autism have difficulty relating to other people and understanding social cues, such as facial expressions and body language. Common symptoms of the disorder include loss of skill sets such as talking, slowed language development, odd movements such as hand flapping and toe walking, repetitive behavior, anxiety, a high pain threshold, difficulty relating to others and a lack of eye contact and response to vocal commands. The Nao robot provides predictable and repetitive behaviors, which in turn improves a child’s social interaction skills with people. Cedric Vaudel, manager of Aldebaran Robotics, says the Nao robot can understand its environment, pick itself up, negotiate its way around objects and is capable of advanced functionality. 'Everything is possible, it’s just a matter of imagination.'"

I was wondering whether the intentions behind this kind of work will matter at all in the near future, and what implications this might have.

Brianne responded: Launa, Maria and Cris, I find the idea of these Nao robots very intriguing. Machines that adapt (this is called machine learning; more at www.aaai.org/AITopics/pmwiki/pmwiki.php/AITopics/MachineLearning) are, simply, incredible. But what do we call these robots? Are they toys? Are they machines? A lot of questions have to be asked, and most of them asked of yourself as a person. As a parent. Do I hold this (machine? toy?) responsible for my child? Responsible for my child's learning? I take a look at myself.

I'm very much looking forward to being a parent in the future. I live with, and help care for, a 7-year-old and a 10-year-old (who is autistic). I would consider this, the Nao robot, a toy. A learning toy. I would hold it no more responsible for my child's learning than I would hold a LeapPad ( www.amazon.com/LeapFrog-30004-LeapPad-Learning-System/dp/B00003GPTI ) responsible for teaching my child to read. *I* am responsible for that. Would I rather teach my child to read myself? Yes. Would I rather teach my child social cues myself, in just the same way that the Nao robot does ("The Nao robot provides predictable and repetitive behaviors, which in turn improves a child’s social interaction skills with people.")? Yes. However, when Mommy is working and not available to actively play with the child, I would rather he or she play with a learning toy, like the LeapPad, than play a video game or watch television or a movie. Basically, what I'm saying is that I think the Nao robot is an incredible learning toy. But not more than a toy.


Post by Cris on Apr 11, 2010 20:46:52 GMT -8

Brianne wrote: More about the Nao robot: Early pricing estimates have come in at about $4,200 to $4,900. It will be available from Aldebaran Robotics starting in 2011. He's 23 inches tall. Brianne


Post by Cris on Apr 13, 2010 11:54:43 GMT -8

10-21-09 EJ wrote: I know this may sound totally off-the-wall random, but I just stumbled upon the teen (not the pre-teen) versions of Neon Genesis Evangelion and they *rock.* Does anyone know these? How might we be able to work most awesome giant robots with people piloting them into our universe? That theme is common in many places... and I have finally fallen for it. Oooo... I'm in love!

10-22-09 Jennifer replies: EJ, Cris says: In the End Times, baby. In the End Times. Angels of Lucifer fighting Angels of the Lord in giant robots with wings. Oooo yeah.

Btw, I love NGE, too. I also discovered the NGE teen graphic novels a few years back. Have the first two or three. Had seen a few episodes of the show (pre-teen) and really didn't like it (way too young), but the graphic novels were fascinating and detailed. Not sure how we'd work that tech into the universe... oh wait. Yeah, actually, I do. Soldiers. War. Think Warhammer. Smaller scale. Also, construction workers. Miners. Late Prime Time.

*sigh* All this talk of robots makes me want my little sweeper bot again!

Jennifer

10-25-09 EJ replies: Jennifer! You used Mechs in "The Taste of Sunlight" fan fiction!!! Thank you!


Post by Cris on Apr 13, 2010 12:16:20 GMT -8

12-3-09, Launa writes: Hey, did you guys hear about this? I saw it on the news today. Had to post it.

Experts: Man controlled robotic hand with thoughts

By ARIEL DAVID (AP)

ROME — An Italian who lost his left forearm in a car crash was successfully linked to a robotic hand, allowing him to feel sensations in the artificial limb and control it with his thoughts, scientists said Wednesday. During a one-month experiment conducted last year, 26-year-old Pierpaolo Petruzziello felt like his lost arm had grown back again, although he was only controlling a robotic hand that was not even attached to his body.

"It's a matter of mind, of concentration," Petruzziello said. "When you think of it as your hand and forearm, it all becomes easier." Though similar experiments have been successful before, the European scientists who led the project say this was the first time a patient has been able to make such complex movements using his mind to control a biomechanic hand connected to his nervous system. The challenge for scientists now will be to create a system that can connect a patient's nervous system and a prosthetic limb for years, not just a month.

The Italy-based team said at a news conference in Rome on Wednesday that in 2008 it implanted electrodes into the nerves located in what remained of Petruzziello's left arm, which was cut off in a crash some three years ago. The prosthetic was not implanted on the patient, only connected through the electrodes. During the news conference, video was shown of Petruzziello as he concentrated to give orders to the hand placed next to him.

During the month he had the electrodes connected, he learned to wiggle the robotic fingers independently, make a fist, grab objects and make other movements. "Some of the gestures cannot be disclosed because they were quite vulgar," joked Paolo Maria Rossini, a neurologist who led the team working at Rome's Campus Bio-Medico, a university and hospital that specializes in health sciences.

The €2 million ($3 million) project, funded by the European Union, took five years to complete and produced several scientific papers that have been submitted to top journals, including Science Translational Medicine and Proceedings of the National Academy of Sciences, Rossini said. After Petruzziello recovered from the microsurgery he underwent to implant the electrodes in his arm, it only took him a few days to master use of the robotic hand, Rossini said. By the time the experiment was over, the hand obeyed the commands it received from the man's brain in 95 percent of cases.

Petruzziello, an Italian who lives in Brazil, said the feedback he got from the hand was amazingly accurate. "It felt almost the same as a real hand. They stimulated me a lot, even with needles ... you can't imagine what they did to me," he joked with reporters. While the "LifeHand" experiment lasted only a month, this was the longest time electrodes had remained connected to a human nervous system in such an experiment, said Silvestro Micera, one of the engineers on the team. Similar, shorter-term experiments in 2004-2005 hooked up amputees to a less-advanced robotic arm with a pliers-shaped end, and patients were only able to make basic movements, he said.

Experts not involved in the study told The Associated Press the experiment was an important step forward in creating a viable interface between the nervous system and prosthetic limbs, but the challenge now is ensuring that such a system can remain in the patient for years and not just a month. "It's an important advancement on the work that was done in the mid-2000s," said Dustin Tyler, a professor at Case Western Reserve University and biomedical engineer at the VA Medical Center in Cleveland, Ohio. "The important piece that remains is how long beyond a month we can keep the electrodes in."

Experts around the world have developed other thought-controlled prostheses. One approach used in the United States involves surgery to graft shoulder nerves onto pectoral muscles and then learning to use those muscles to control a bionic arm. While that approach is necessary when the whole arm has been lost, if a stump survives doctors could opt for the less invasive method proposed by the Italians, connecting the prosthesis to the same system the brain uses to send and receive signals.

"The approach we followed is natural," Rossini said. The patient "didn't have to learn to use muscles that do a different job to move a prosthesis, he just had to concentrate and send to the robotic hand the same messages he used to send to his own hand." It will take at least two or three years before scientists try to replicate the experiment with a more long-term prosthesis, the experts said. First they need to study if the hair-thin electrodes can be kept in longer.

Results from the experiment are encouraging, as the electrodes removed from Petruzziello showed no damage and could well stay in longer, said Klaus-Peter Hoffmann, a biomedical expert at the Fraunhofer-Gesellschaft, the German research institute that developed the electrodes.

More must also be done to miniaturize the technology on the arm and the bulky machines that translate neural and digital signals between the robot and the patient. Key steps forward are already being made, Rossini said. While working with Petruzziello, the Italian scientists also were collaborating on a parallel EU-funded project called "SmartHand," which has developed a robotic arm that can be directly implanted on the patient.

Copyright © 2009 The Associated Press. All rights reserved.


Post by Cris on Apr 13, 2010 12:17:13 GMT -8

12-7-09 Jennifer writes: This is a great step in the research arena that marries our nervous system with hardware. Many of you may remember the discussion of the hardware/wetware connection and divide that was posted on the forum quite some time ago. Unlike what some members thought originally, the hardware/wetware connection is no longer about "any system where a human being uses technology" but rather a literal, biological connection/interface between hardware and the interior of the human body (wetware).

For members following this research or reading way too much Stross (LOL! Is there ever too much Stross?), you'll see that this is the technology that will lead to implants -- not just the robotic and cybernetic type but rather the interface type such as HUDs, feedlinks, etc.

Our mind, after all, is just pulses of energy, and since the best technology mirrors nature (i.e., our body and its systems), the idea that these two elements might mesh shouldn't be surprising.

Again, great to know that progress is being made.


Post by Cris on Apr 13, 2010 12:19:35 GMT -8

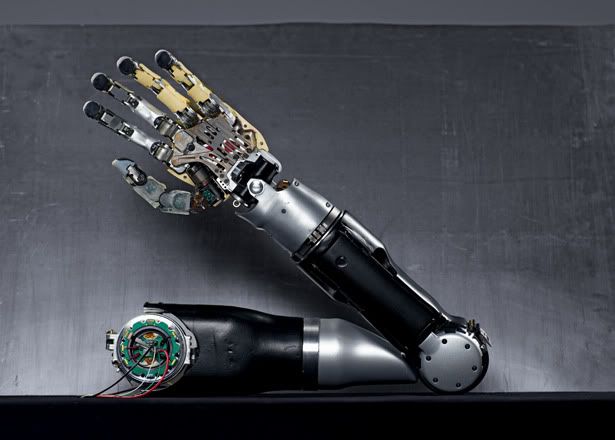

12-17-09, Jennifer writes: For those of you who snuck a peek at the Crusade Battle Anthology and the Player's Handbook, you enjoyed a two-part story by Sha that featured the character Siroun Derdurian, who, after *something* dramatic and horrible occurred, came to have *most* of her body fitted with cybernetics. This *ultimate* bridging of the hardware/wetware divide may not be as wet as once imagined. The further development of biofeedback and the sensing of electromagnetic impulses *through* the skin (without breaking the skin) continues. The cover story in January's National Geographic has amazing pictures, and the article can be found online at ngm.nationalgeographic.com/2010/01/bionics/fischman-text/1. Some of the images (and others) can be found at ngm.nationalgeographic.com/2010/01/bionics/thiessen-photography. The image at the top of this post shows what the arm looks like.

Siroun's unique condition is less science fiction and more science fact. It simply means that she had incredible health insurance coverage!


Post by Cris on Apr 13, 2010 14:19:13 GMT -8

4.1.10 at 12:32am, Brianne wrote: Now, while France has been busy with the Nao robot, check out what Japan's been working on... Yes, that's right. Androids. From what I've seen on YouTube, the best of the androids are Actroids. Here's what Wikipedia has on them (from en.wikipedia.org/wiki/Actroid ):

Internal sensors allow Actroid models to react with a natural appearance by way of air actuators placed at many points of articulation in the upper body. Early models had 42 points of articulation; later models have 47. So far, movement in the lower body is limited. The operation of the robot's sensory system in tandem with its air-powered movements makes it quick enough to react to or fend off intrusive motions, such as a slap or a poke. Artificial intelligence possessed by the android gives it the ability to react differently to more gentle kinds of touch, such as a pat on the arm.

The Actroid can also imitate human-like behavior with slight shifts in position, head and eye movements and the appearance of breathing in its chest. Additionally, the robot can be "taught" to imitate human movements by facing a person who is wearing reflective dots at key points on their body. By tracking the dots with its visual system and computing limb and joint movements to match what it sees, this motion can then be "learned" by the robot and repeated.

The skin is composed of silicone and appears highly realistic. The compressed air that powers the robot's servo motors, and most of the computer hardware that operates the A.I., are external to the unit. This is a contributing factor to the robot's lack of locomotion capabilities. When displayed, the Actroid has always been either seated or standing with firm support from behind.

The interactive Actroids can also communicate on a rudimentary level with humans by speaking. Microphones within those Actroids record the speech of a human, and this sound is then filtered to remove background noise, including the sounds of the robot's own operation. Speech recognition software is then used to convert the audio stream into words and sentences, which can then be processed by the Actroid's A.I. A verbal response is then given through speakers external to the unit. Further interactivity is achieved through non-verbal methods. When addressed, the interactive Actroids use a combination of "floor sensors and omnidirectional vision sensors" in order to maintain eye contact with the speaker. In addition, the robots can respond in limited ways to body language and tone of voice by changing their own facial expressions, stance and vocal inflection.

The original Repliee Q1 had a "sister" model, Repliee R1, which is modeled after a 5-year-old Japanese girl. More advanced models were present at Expo 2005 in Aichi to help direct people to specific locations and events. Four unique visages were given to these robots. The ReplieeQ1-expo was modeled after a presenter for NHK news. To make the face of the Repliee Q2 model, the faces of several young Japanese women were scanned and the images combined into an average composite face.

The newer model Actroid-DER2 made a recent tour of U.S. cities. At NextFest 2006, the robot spoke English and was displayed in a standing position and dressed in a black vinyl bodysuit. A different Actroid-DER2 was also shown in Japan around the same time. This new robot has more realistic features and movements than its predecessor.

In July 2006, another appearance was given to the robot. This model was built to look like its male co-creator, roboticist Hiroshi Ishiguro, and named Geminoid HI-1. Controlled by a motion-capture interface, Geminoid HI-1 can imitate Ishiguro's body and facial movements, and it can reproduce his voice in sync with his motion and posture. Ishiguro hopes to develop the robot's human-like presence to such a degree that he could use it to teach classes remotely, lecturing from home while the Geminoid interacts with his classes at Osaka University.

Brianne
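The Wikipedia passage says an Actroid "learns" a movement by tracking reflective dots on a person and computing limb and joint movements from them. The quoted text doesn't describe the actual Actroid software, so what follows is only a hypothetical sketch of one piece of that idea: computing a joint angle from three tracked marker positions (the angle between two vectors). The marker names, the coordinates and the `joint_angle` and `elbow_trajectory` helpers are all invented for illustration.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def joint_angle(a: Point, joint: Point, b: Point) -> float:
    """Angle in degrees at `joint` between the segments joint->a and joint->b."""
    u = [a[i] - joint[i] for i in range(3)]
    v = [b[i] - joint[i] for i in range(3)]
    norm = math.hypot(*u) * math.hypot(*v)  # 3-argument hypot needs Python 3.8+
    if norm == 0.0:
        raise ValueError("a marker coincides with the joint marker")
    cos_theta = sum(x * y for x, y in zip(u, v)) / norm
    # Clamp so floating-point rounding never pushes acos outside its domain.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def elbow_trajectory(frames: List[Dict[str, Point]]) -> List[float]:
    """Turn a recorded sequence of hypothetical marker frames into elbow angles to replay."""
    return [joint_angle(f["shoulder"], f["elbow"], f["wrist"]) for f in frames]


if __name__ == "__main__":
    # Two hypothetical frames: arm bent at a right angle, then held straight.
    frames = [
        {"shoulder": (0.0, 1.0, 0.0), "elbow": (0.0, 0.0, 0.0), "wrist": (1.0, 0.0, 0.0)},
        {"shoulder": (0.0, 1.0, 0.0), "elbow": (0.0, 0.0, 0.0), "wrist": (0.0, -1.0, 0.0)},
    ]
    print(elbow_trajectory(frames))
```

Run as a script, this prints angles of roughly 90 and 180 degrees for the bent and straight frames. A real motion-capture system would also have to identify which dot is which and smooth noisy frames; this sketch assumes the markers are already labeled.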
